Introduction

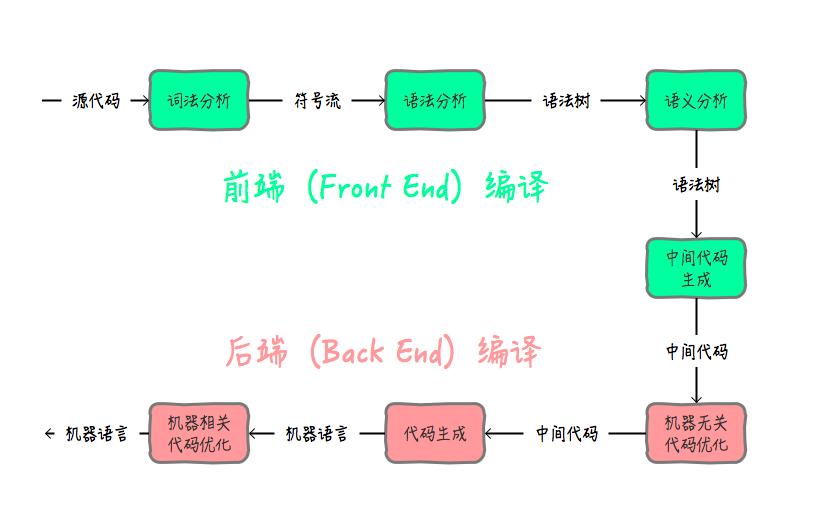

Before delving into JIT, it’s essential to have a basic understanding of the compilation process.

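In compiler theory, translating source code into machine instructions generally involves several stages: lexical analysis, syntax analysis (parsing), semantic analysis, intermediate code generation, optimization, and target code generation.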

JIT Overview

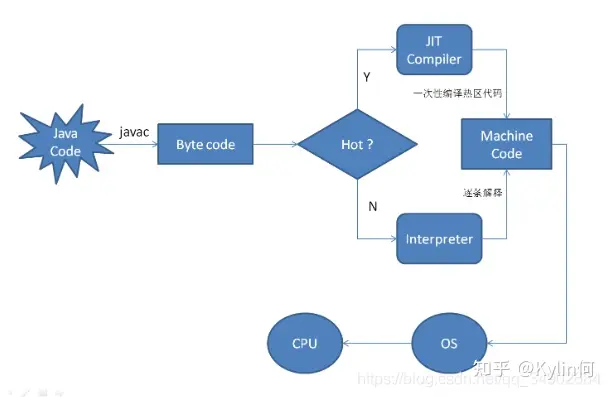

JIT stands for Just-In-Time compiler. JIT technology speeds up the execution of Java programs. But how does it achieve this?

Java is often described as a semi-compiled, semi-interpreted language. The javac compiler compiles source code into platform-independent Java bytecode (.class files). The Java Virtual Machine (JVM) then interprets and executes this bytecode, which is what makes Java platform-independent. However, interpreting bytecode means translating each instruction into the corresponding machine instructions at run time, which is inevitably slower than directly executing native machine code.

To improve execution speed, the JVM uses JIT compilation. When the JVM identifies a method or code block that is executed frequently, it treats it as “hot spot code.” The JIT compiler compiles this hot spot code into native machine code for the current platform, optimizes it, and caches the compiled code for future use.
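The counting-and-caching idea can be sketched as a language-neutral toy model, written here in JavaScript. This is not how HotSpot is implemented: `makeJitRuntime`, `HOT_THRESHOLD`, and the `interpret`/`compile` callbacks are hypothetical stand-ins. Each method starts on the slow interpreted path; once its invocation count reaches the threshold, it is “compiled” once and every later call reuses the cached result.

```javascript
// Toy model of hot spot detection. HOT_THRESHOLD and every name below are
// hypothetical; real JVMs use far more elaborate counters and heuristics.
const HOT_THRESHOLD = 3;

function makeJitRuntime(interpret, compile) {
  const counters = new Map();  // method name -> invocation count
  const codeCache = new Map(); // method name -> compiled function

  return function invoke(name, ...args) {
    // Reuse cached compiled code whenever it exists.
    const cached = codeCache.get(name);
    if (cached) return cached(...args);

    const count = (counters.get(name) || 0) + 1;
    counters.set(name, count);

    if (count >= HOT_THRESHOLD) {
      // The method just became hot: compile it once and cache the result.
      const compiled = compile(name);
      codeCache.set(name, compiled);
      return compiled(...args);
    }
    // Still cold: run it through the slower interpreter.
    return interpret(name, ...args);
  };
}

// Usage: record which path handled each call.
const log = [];
let compilations = 0;
const invoke = makeJitRuntime(
  (name, x) => { log.push("interpreted"); return x * 2; },
  (name) => { compilations++; return (x) => { log.push("compiled"); return x * 2; }; }
);
for (let i = 0; i < 5; i++) invoke("double", i);
console.log(log, compilations);
```

With a threshold of 3, the first two calls are interpreted, the third triggers a single compilation, and every call after that runs the cached code.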

Hot Spot Compilation

When the JVM executes code, it doesn’t immediately start compiling it. There are two main reasons for this:

Firstly, if a piece of code will be executed only once, compiling it is essentially a waste of resources: interpreting the Java bytecode once is faster than compiling it and then executing the compiled code.

However, if a piece of code, such as a frequently called method or a loop, is executed many times, compiling it becomes worthwhile. The JVM profiles the running program to discover which methods are called frequently, so that its compilation effort goes where it pays off. The HotSpot VM uses JIT compilation to compile such frequently executed bytecode directly into machine instructions, using the method as the compilation unit. Once a method has been JIT-compiled, later calls execute the compiled machine instructions directly instead of interpreting the bytecode, which is where the performance boost comes from.

The second reason is optimization. The more often a method or loop is executed, the more profiling information the JVM collects about how the code actually behaves, and the JVM can use that information to apply better optimizations when it compiles the code.
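The first reason above is a break-even argument. The sketch below makes it concrete with made-up cost units (the `costs` values are hypothetical, not measurements of any JVM): compiling pays off only once the code runs often enough that the per-run savings cover the one-time compilation cost, that is, when the run count exceeds `compileOnce / (interpretPerRun - compiledPerRun)`.

```javascript
// Hypothetical cost units, not measurements.
const costs = { interpretPerRun: 10, compileOnce: 500, compiledPerRun: 1 };

// Total cost of running a piece of code `runs` times, with or without compiling it first.
function totalCost(runs, compileFirst, c = costs) {
  return compileFirst
    ? c.compileOnce + runs * c.compiledPerRun
    : runs * c.interpretPerRun;
}

// Smallest run count for which compiling first is strictly cheaper.
// Assumes compiled code is faster per run than interpreting it.
function breakEvenRuns(c = costs) {
  return Math.floor(c.compileOnce / (c.interpretPerRun - c.compiledPerRun)) + 1;
}

console.log(totalCost(1, false), totalCost(1, true)); // 10 501
console.log(breakEvenRuns());                          // 56
```

For code that runs once, interpreting costs 10 units while compiling first costs 501; with these numbers, compilation only wins from the 56th run onward.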

How JavaScript Is Compiled - How the JIT (Just-In-Time) Compiler Works

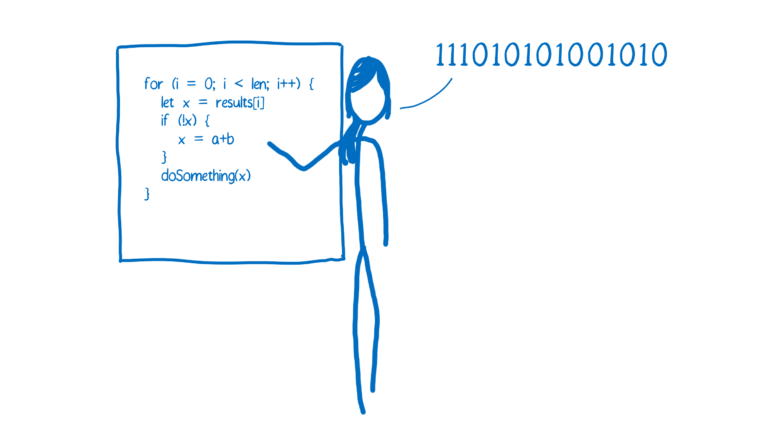

In general, there are two ways to translate programs into machine-executable instructions: using a Compiler or an Interpreter.

Interpreter

An interpreter translates and executes code line by line as it encounters it.

Pros:

- Starts running immediately, with no upfront compilation delay.

Cons:

- The same code may be translated many times, especially inside loops.
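To make the interpreter’s drawback concrete, here is a toy interpreter for a hypothetical mini-language whose statements have the form `target = left + right`. The language and every function name are invented for illustration. Because it re-parses each statement every time it executes, a loop body is translated again on every iteration.

```javascript
let parseCount = 0;

// Parse "target = left + right" into its three parts.
function parse(line) {
  parseCount++;
  const [target, expr] = line.split("=").map((s) => s.trim());
  const [left, right] = expr.split("+").map((s) => s.trim());
  return { target, left, right };
}

// Numeric literals evaluate to themselves; other tokens are variable names.
function read(env, token) {
  return /^\d+$/.test(token) ? Number(token) : env[token];
}

function interpretLoop(body, env, times) {
  for (let n = 0; n < times; n++) {
    for (const line of body) {
      const { target, left, right } = parse(line); // translated again on every pass
      env[target] = read(env, left) + read(env, right);
    }
  }
  return env;
}

const env = interpretLoop(["sum = sum + i", "i = i + 1"], { sum: 0, i: 0 }, 4);
console.log(env.sum, parseCount);
```

Four iterations over a two-statement body cost eight parses, even though only two distinct statements exist.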

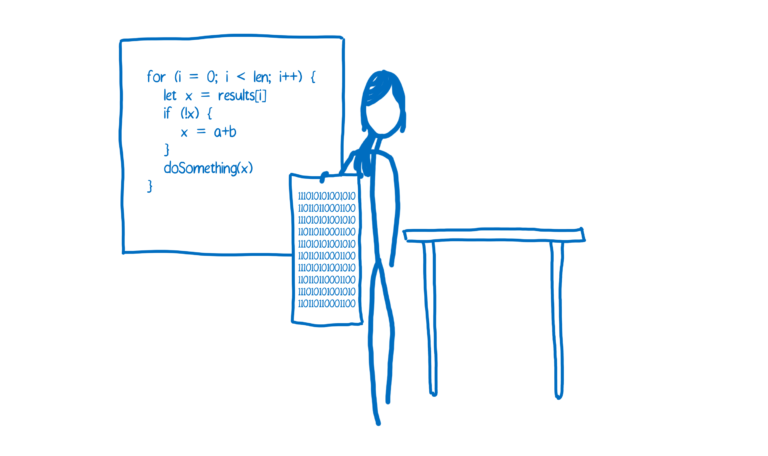

Compiler

A compiler translates the code in advance and generates an executable program.

Pros:

- No repeated translation, and the compiler can optimize the code while compiling it.

Cons:

- Requires an upfront compilation step before the program can run.
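For contrast, a toy compiler for the same hypothetical `target = left + right` statements translates each statement into a JavaScript closure once, ahead of time. All names here are illustrative assumptions. Running the loop afterwards involves no further translation, no matter how many iterations it performs.

```javascript
let translateCount = 0;

// Turn an operand token into a function that fetches its value at run time.
function operand(token) {
  if (/^\d+$/.test(token)) {
    const value = Number(token);
    return () => value; // literal: resolved once, at compile time
  }
  return (env) => env[token]; // variable: looked up in the environment
}

function compileStatement(line) {
  translateCount++;
  const [target, expr] = line.split("=").map((s) => s.trim());
  const [left, right] = expr.split("+").map((s) => s.trim());
  const getLeft = operand(left);
  const getRight = operand(right);
  return (env) => { env[target] = getLeft(env) + getRight(env); };
}

function compileProgram(body) {
  const steps = body.map(compileStatement); // each statement translated exactly once
  return (env, times) => {
    for (let n = 0; n < times; n++) {
      for (const step of steps) step(env); // no parsing inside the loop
    }
    return env;
  };
}

const run = compileProgram(["sum = sum + i", "i = i + 1"]);
const result = run({ sum: 0, i: 0 }, 4);
console.log(result.sum, translateCount);
```

The compiled program yields the same result as the interpreted one, but the translation work happens twice in total instead of once per statement per iteration.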

JIT

When JavaScript first emerged, it was a typical interpreted language, resulting in slow execution speeds. Later, browsers introduced JIT compilers, significantly improving JavaScript’s execution speed.

The principle: browsers added a new component to the JavaScript engine, called a monitor (or profiler). The monitor observes the running code, recording how many times each code segment runs and which variable types it uses.
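A minimal sketch of such a monitor, assuming we can wrap a function from the outside. The `withMonitor` and `typeOf` helpers are hypothetical and not part of any engine’s API; real engines collect this data internally and per operation rather than per call.

```javascript
// Classify a value the way this sketch's profile reports it.
function typeOf(value) {
  return Array.isArray(value) ? "array" : typeof value;
}

// Wrap fn so every call updates a profile: a call count plus a tally of
// argument-type signatures such as "number,number".
function withMonitor(fn) {
  const profile = { calls: 0, typeSignatures: new Map() };
  function monitored(...args) {
    profile.calls++;
    const signature = args.map(typeOf).join(",");
    const seen = profile.typeSignatures.get(signature) || 0;
    profile.typeSignatures.set(signature, seen + 1);
    return fn(...args);
  }
  monitored.profile = profile;
  return monitored;
}

const add = withMonitor((a, b) => a + b);
add(1, 2);
add(3, 4);
add("a", 1);
console.log(add.profile.calls, [...add.profile.typeSignatures]);
```

After these three calls the profile shows that `add` ran three times, twice with two numbers and once with a string and a number; this is the kind of information the later compilation tiers rely on.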

Why does this approach speed up execution?

Let’s consider a function for illustration:

```javascript
function arraySum(arr) {
  var sum = 0;
  for (var i = 0; i < arr.length; i++) {
    sum += arr[i];
  }
  return sum;
}
```

1st Step - Interpreter

Initially, the code is executed by the interpreter. When a line of code has been executed a few times, it is marked as “Warm”; if it is executed frequently, it is labeled “Hot.”
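The Warm/Hot labeling can be sketched as a per-line execution counter. The thresholds below are hypothetical values chosen for illustration, and `recordExecution` stands in for a hook an imaginary interpreter would call; real engines use their own, more involved heuristics.

```javascript
// Hypothetical thresholds for illustration only.
const WARM_THRESHOLD = 2;
const HOT_THRESHOLD = 10;

function label(executionCount) {
  if (executionCount >= HOT_THRESHOLD) return "hot";
  if (executionCount >= WARM_THRESHOLD) return "warm";
  return "cold";
}

const lineCounts = new Map(); // line of source -> times executed

// Called by the (imaginary) interpreter each time it runs a line.
function recordExecution(line) {
  const count = (lineCounts.get(line) || 0) + 1;
  lineCounts.set(line, count);
  return label(count);
}

const labels = [];
for (let n = 0; n < 10; n++) labels.push(recordExecution("sum += arr[i]"));
console.log(labels[0], labels[1], labels[9]); // cold warm hot
```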

2nd Step - Baseline Compiler

Warm code is passed to the Baseline Compiler, which compiles it and stores the result. The compiled code is indexed by line number and variable types (why variable types matter is explained shortly).

When a later execution matches an existing index entry, the corresponding compiled code runs directly, so code that has already been compiled is never compiled again.
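A toy version of the baseline compiler’s index, assuming operands are only numbers or strings. Each compiled stub is stored under a key made of a line identifier plus the operand types, and it is reused whenever the same key comes up again. `executeAdd`, `compileStub`, and the key format are hypothetical.

```javascript
const stubCache = new Map(); // "line:leftType:rightType" -> compiled stub
let compileCount = 0;

function compileStub(leftType, rightType) {
  compileCount++;
  if (leftType === "number" && rightType === "number") {
    return (a, b) => a + b; // numeric addition
  }
  // This sketch only handles numbers and strings: with a string operand,
  // + means concatenation.
  return (a, b) => String(a) + String(b);
}

function executeAdd(lineId, a, b) {
  const key = `${lineId}:${typeof a}:${typeof b}`;
  let stub = stubCache.get(key);
  if (!stub) {
    stub = compileStub(typeof a, typeof b);
    stubCache.set(key, stub); // store it for later executions
  }
  return stub(a, b); // index hit: run compiled code, no recompilation
}

const r1 = executeAdd("sum += arr[i]", 1, 2);   // compiles the number:number stub
const r2 = executeAdd("sum += arr[i]", 3, 4);   // same key: reuses the stub
const r3 = executeAdd("sum += arr[i]", "a", 5); // new types: compiles another stub
console.log(r1, r2, r3, compileCount);
```

Three executions of the same line produce only two compilations, because the second call finds a stub under the same line-and-types key.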

3rd Step - Optimizing Compiler

Hot code is sent to the Optimizing Compiler, which applies further optimizations. How are these optimizations performed? This is the key point: because JavaScript is dynamically typed, a single line of code can have several possible compilations, one for each combination of operand types. This is the cost of dynamic typing.

For instance:

- `sum` is an integer, `arr` is an Array, and `i` is an integer: the `+` operation is simple numeric addition, which corresponds to one compilation result.
- `sum` is a string, `arr` is an Array, and `i` is an integer: the `+` operation is string concatenation, which requires converting `arr[i]` to a string.

…
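The type dependence is easy to observe in plain JavaScript. Running the `arraySum` function from earlier (repeated here so the snippet is self-contained, with its result returned) on different inputs shows the same `+=` line performing numeric addition in one call and string concatenation in another, and even switching behavior partway through a single loop.

```javascript
function arraySum(arr) {
  var sum = 0;
  for (var i = 0; i < arr.length; i++) {
    sum += arr[i];
  }
  return sum;
}

console.log(arraySum([1, 2, 3]));   // 6      (numeric addition throughout)
console.log(arraySum(["1", 2, 3])); // "0123" (sum becomes a string on the first step)
console.log(arraySum([1, "2", 3])); // "123"  (numeric first, then concatenation)
```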

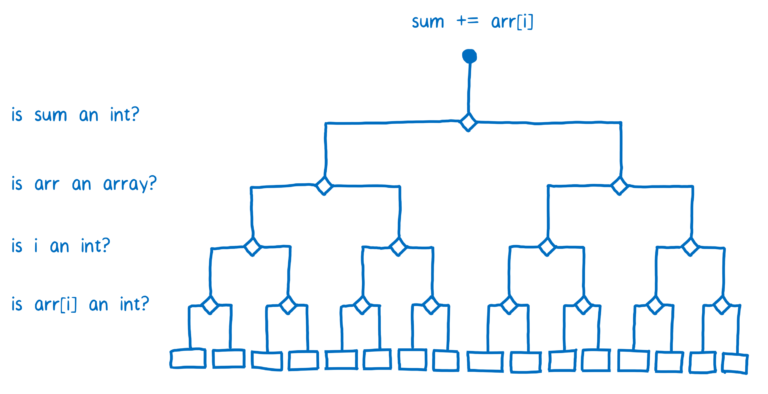

As illustrated in the diagram above, even this simple line of code has 16 possible compilation results.

The Baseline Compiler has to handle all of these cases, which is why its compiled code is indexed by both line number and variable types: different variable types produce different compilation results.

If the code is only “Warm,” the JIT’s work ends here. Each subsequent execution performs type checks and uses the matching compiled result.

When the code becomes “Hot,” however, the JIT optimizes it further. Here, optimization means the JIT makes specific assumptions. For example, if it assumes that `sum` and `i` are both integers and `arr` is an Array, only one compilation result is needed.

In practice, type checks are still performed before the optimized code runs. If the assumptions turn out to be wrong, execution falls back to the interpreter or to the baseline-compiled version. This process is called “deoptimization.”
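A minimal, hand-written sketch of the optimize/deoptimize idea. Real engines generate this kind of code automatically, and the function names here are hypothetical. The “optimized” version assumes every element is a number, checks that assumption on each iteration, and falls back to the generic version when the check fails. Because this `arraySum` has no side effects, the fallback can simply restart from the beginning; real engines instead reconstruct the interpreter’s state at the exact point of failure.

```javascript
let deoptCount = 0;

// Generic version: makes no assumptions, so it handles any element types.
function genericArraySum(arr) {
  var sum = 0;
  for (var i = 0; i < arr.length; i++) sum += arr[i];
  return sum;
}

// "Optimized" version: assumes every element is a number.
function optimizedArraySum(arr) {
  let sum = 0;
  for (let i = 0; i < arr.length; i++) {
    const x = arr[i];
    if (typeof x !== "number") {
      // Type check failed: the assumption was wrong, so deoptimize.
      deoptCount++;
      return genericArraySum(arr); // safe to restart: no side effects so far
    }
    sum += x; // both operands known to be numbers: plain numeric addition
  }
  return sum;
}

console.log(optimizedArraySum([1, 2, 3]));   // 6, assumption holds
console.log(optimizedArraySum([1, "2", 3])); // "123", after deoptimizing
console.log(deoptCount);                      // 1
```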

As this shows, execution speed depends on how often these assumptions hold. If they usually hold, the code runs faster. If they often fail, the code can run slower than it would with no optimization at all, because of the repeated optimize-then-deoptimize cycle.
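The dependence on the success rate can be expressed as a simple expected-cost model. The cost numbers below are hypothetical, and the model deliberately ignores the one-time cost of optimizing and the small cost of the type checks themselves. With success probability `p`, one call through the optimized code costs `p * optimized + (1 - p) * (deoptPenalty + baseline)` on average, and optimizing stops paying off when that exceeds the baseline cost.

```javascript
// Hypothetical per-call costs in arbitrary units.
const COST = { baseline: 10, optimized: 2, deoptPenalty: 50 };

// Expected cost of one call through the optimized code when its type
// assumptions hold with probability p. On failure we pay the bailout plus
// a run of the baseline version.
function expectedOptimizedCost(p, c = COST) {
  return p * c.optimized + (1 - p) * (c.deoptPenalty + c.baseline);
}

// Success rate at which optimizing neither helps nor hurts.
function breakEvenSuccessRate(c = COST) {
  return c.deoptPenalty / (c.deoptPenalty + c.baseline - c.optimized);
}

for (const p of [1, 0.99, 0.9, 0.5]) {
  console.log(p, expectedOptimizedCost(p).toFixed(2), "vs baseline", COST.baseline);
}
console.log("break-even success rate:", breakEvenSuccessRate().toFixed(3));
```

With these made-up numbers the assumptions must hold on roughly 86% of calls (50/58) before the optimized code beats the baseline; at a 50% success rate it is about three times slower.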

Conclusion

In summary, this is what the JIT does at runtime: it monitors running code and identifies hot code paths to optimize, making JavaScript run faster. This significantly improves the performance of most JavaScript applications.

However, JavaScript performance remains unpredictable. To make code faster, the JIT adds some overhead at runtime, including:

- Optimization and deoptimization

- Memory for the monitor’s bookkeeping and for the information needed to recover when a deoptimization happens

- Memory for storing both the baseline and optimized versions of functions

There’s room for improvement, notably in eliminating overhead to make performance more predictable.